Alan Yu

I'm a recent graduate of MIT, where I did my MEng ('25) at LIDS advised by Luca Carlone. Before that, I did my B.S. in Artificial Intelligence ('24), where I was fortunate to work with Ge Yang, Phillip Isola, and John Leonard at CSAIL. My research is in robot learning, where I'm interested in foundation models, generative AI + simulation, and scaling up evaluation.

In my free time I enjoy swimming and attempting to play the guitar.

Recent Updates

Research

Select projects are shown below; please see Google Scholar for more!

LucidXR: An Extended-Reality Data Engine for Robotic Manipulation

Conference on Robot Learning (CoRL), 2025

We build a data engine for collecting demonstrations in a simulation that runs untethered on a VR device. We then propose amplifying the human-collected demonstrations and rendering them with generative models to synthesize data for policy learning.

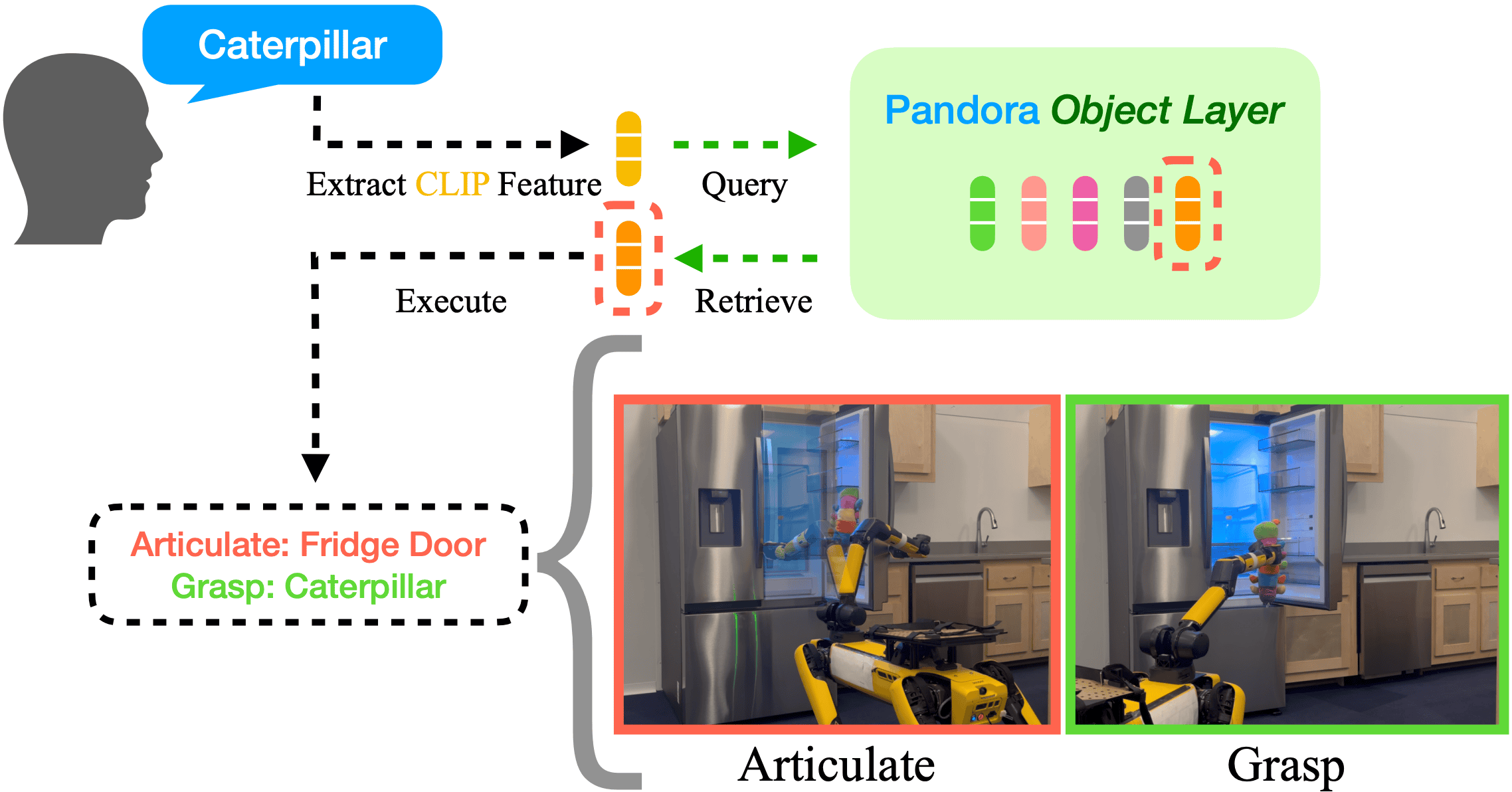

Pandora: Articulated 3D Scene Graphs from Egocentric Vision

British Machine Vision Conference (BMVC), 2025

We construct articulated scene graphs from egocentric vision with Project Aria glasses to model articulated and concealed objects. We demonstrate that the resulting scene graph can be used for object retrieval.

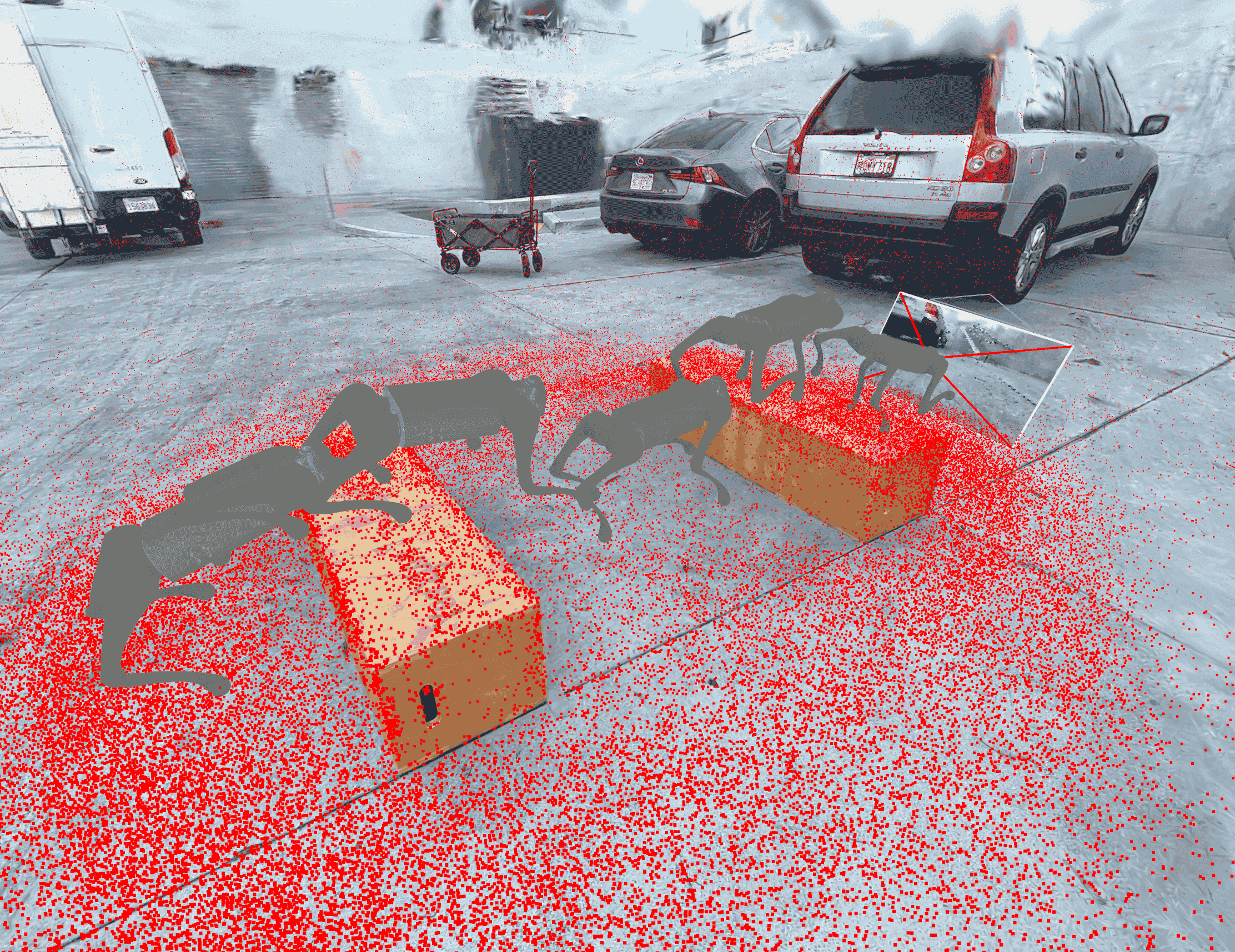

The Neverwhere Visual Parkour Benchmark Suite

Under Review

We offer over sixty high-quality Gaussian splatting-based evaluation environments, along with the Neverwhere graphics tool-chain for producing accurate collision meshes. Our aim is to promote reproducible robotics research via fully automated, continuous testing in closed-loop evaluation.

LucidSim: Learning Visual Parkour from Generated Images

Conference on Robot Learning (CoRL), 2024

We augment classical physics simulators with generative models to obtain a realistic and diverse data source. We demonstrate that robots trained this way can accomplish highly dynamic tasks like parkour without requiring depth input.

Distilled Feature Fields Enable Few-Shot Language-Guided Manipulation

Conference on Robot Learning (CoRL), 2023 (Best Paper Award)

We distill features from 2D foundation models into 3D feature fields, and enable few-shot language-guided manipulation that generalizes across object poses, shapes, appearances and categories.

Teaching

🤖 TA for MIT Robotics: Science and Systems (6.4200), Spring 2024 + 2025.